PRESS RELEASE

17 May 2022

Apple previews innovative accessibility features combining the power of hardware, software, and machine learning

Software features coming later this year offer users with disabilities new tools for navigation, health, communication, and more

CUPERTINO, CALIFORNIA - Apple today previewed innovative software features that introduce new ways for users with disabilities to navigate, connect, and get the most out of Apple products. These powerful updates combine the company’s latest technologies to deliver unique and customisable tools for users, and build on Apple’s long-standing commitment to making products that work for everyone.

Using advancements across hardware, software, and machine learning, people who are blind or low vision can use their iPhone and iPad to navigate the last few feet to their destination with Door Detection; users with physical and motor disabilities who may rely on assistive features like Voice Control and Switch Control can fully control Apple Watch from their iPhone with Apple Watch Mirroring; and the Deaf and hard of hearing community can follow Live Captions on iPhone, iPad, and Mac. Apple is also expanding support for its industry-leading screen reader VoiceOver with over 20 new languages and locales. These features will be available later this year with software updates across Apple platforms.

“Apple embeds accessibility into every aspect of our work, and we are committed to designing the best products and services for everyone,” said Sarah Herrlinger, Apple’s senior director of Accessibility Policy and Initiatives. “We’re excited to introduce these new features, which combine innovation and creativity from teams across Apple to give users more options to use our products in ways that best suit their needs and lives.”

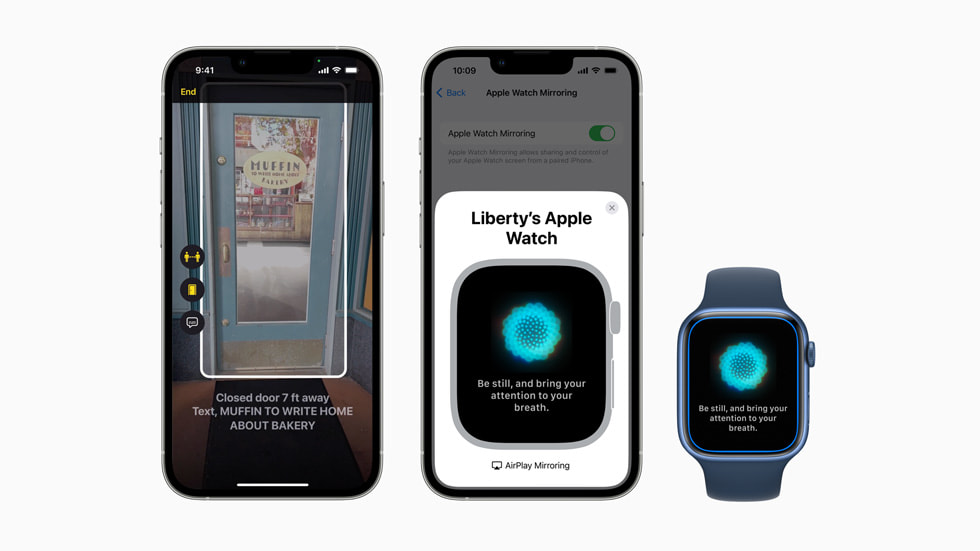

Door Detection for Users Who Are Blind or Low Vision

Apple is introducing Door Detection, a cutting-edge navigation feature for users who are blind or low vision. Door Detection can help users locate a door upon arriving at a new destination and understand how far they are from it. It can also describe door attributes, including whether the door is open or closed and, when it’s closed, whether it can be opened by pushing, turning a knob, or pulling a handle. Door Detection can also read signs and symbols around the door, like the room number at an office, or the presence of an accessible entrance symbol. This new feature combines the power of LiDAR, camera, and on-device machine learning, and will be available on iPhone and iPad models with the LiDAR Scanner.[1]

Door Detection will be available in a new Detection Mode within Magnifier, Apple’s built-in app supporting blind and low vision users. Door Detection, People Detection, and Image Descriptions can each be used alone or simultaneously in Detection Mode, offering users with vision disabilities a go-to place with customisable tools to help navigate and access rich descriptions of their surroundings. In addition to navigation tools within Magnifier, Apple Maps will offer sound and haptic feedback for VoiceOver users to identify the starting point for walking directions.

Door Detection is a powerful feature for users who are blind or low vision to navigate the last few feet to their destination.

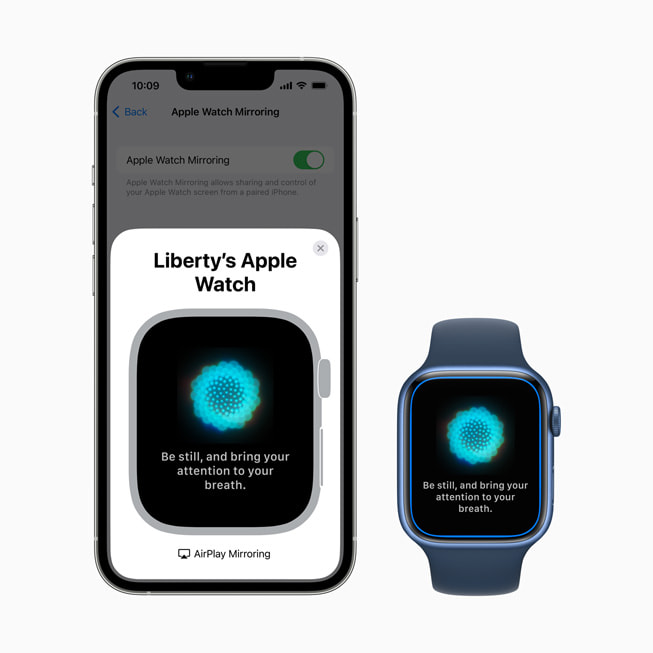

Advancing Physical and Motor Accessibility for Apple Watch

Apple Watch becomes more accessible than ever for people with physical and motor disabilities with Apple Watch Mirroring, which helps users control Apple Watch remotely from their paired iPhone. With Apple Watch Mirroring, users can control Apple Watch using iPhone’s assistive features like Voice Control and Switch Control, and use inputs including voice commands, sound actions, head tracking, or external Made for iPhone switches as alternatives to tapping the Apple Watch display. Apple Watch Mirroring uses hardware and software integration, including advances built on AirPlay, to help ensure users who rely on these mobility features can benefit from unique Apple Watch apps like Blood Oxygen, Heart Rate, Mindfulness, and more.[2]

Plus, users can do even more with simple hand gestures to control Apple Watch. With new Quick Actions on Apple Watch, a double-pinch gesture can answer or end a phone call, dismiss a notification, take a photo, play or pause media in the Now Playing app, and start, pause, or resume a workout. This builds on the innovative technology used in AssistiveTouch on Apple Watch, which gives users with upper body limb differences the option to control Apple Watch with gestures like a pinch or a clench without having to tap the display.

Live Captions Come to iPhone, iPad, and Mac for Deaf and Hard of Hearing Users

For the Deaf and hard of hearing community, Apple is introducing Live Captions on iPhone, iPad, and Mac.[3] Users can follow along more easily with any audio content, whether they are on a phone or FaceTime call, using a video conferencing or social media app, streaming media content, or having a conversation with someone next to them. Users can also adjust font size for ease of reading. Live Captions in FaceTime attribute auto-transcribed dialogue to call participants, so group video calls become even more convenient for users with hearing disabilities. When Live Captions are used for calls on Mac, users have the option to type a response and have it spoken aloud in real time to others who are part of the conversation. And because Live Captions are generated on device, user information stays private and secure.

Live Captions on iPhone, iPad, and Mac make it easier to follow along with any audio content.

VoiceOver Adds New Languages and More

VoiceOver, Apple’s industry-leading screen reader for blind and low vision users, is adding support for more than 20 additional locales and languages, including Bengali, Bulgarian, Catalan, Ukrainian, and Vietnamese.[4] Users can also select from dozens of new voices that are optimised for assistive features across languages. These new languages, locales, and voices will also be available for the Speak Selection and Speak Screen accessibility features. Additionally, VoiceOver users on Mac can use the new Text Checker tool to discover common formatting issues such as duplicate spaces or misplaced capital letters, which makes proofreading documents or emails even easier.

Additional Features

- With Buddy Controller, users can ask a care provider or friend to help them play a game; Buddy Controller combines any two game controllers into one, so multiple controllers can drive the input for a single player.

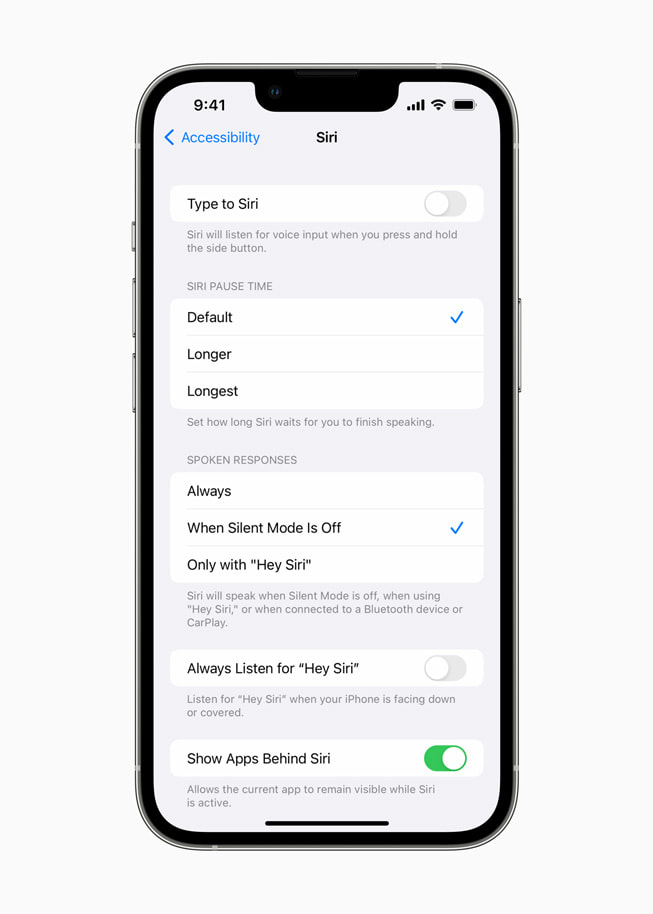

- With Siri Pause Time, users with speech disabilities can adjust how long Siri waits before responding to a request.

- Voice Control Spelling Mode gives users the option to dictate custom spellings using letter-by-letter input.[5]

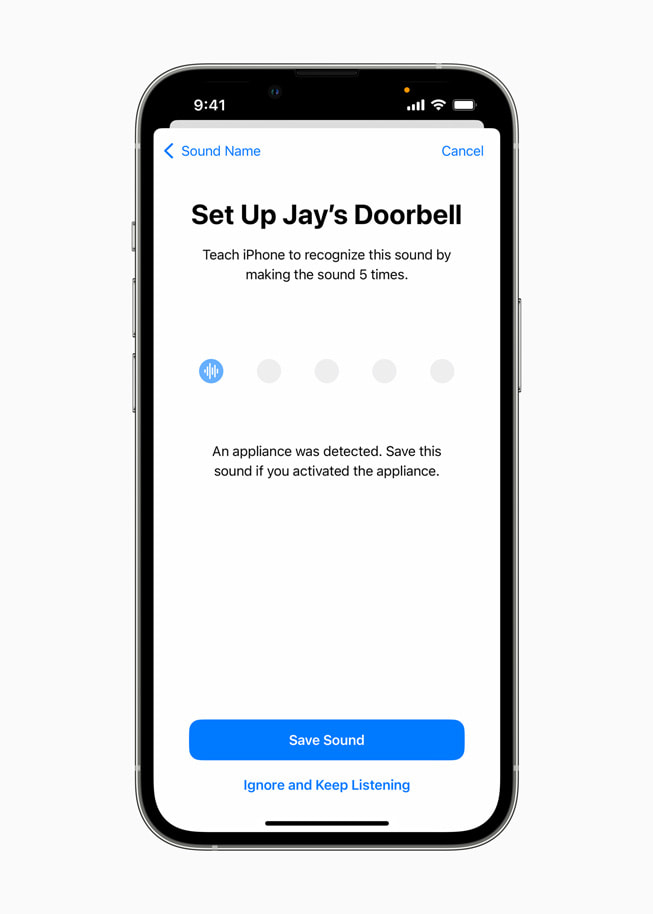

- Sound Recognition can be customised to recognise sounds that are specific to a person’s environment, like their home’s unique alarm, doorbell, or appliances.

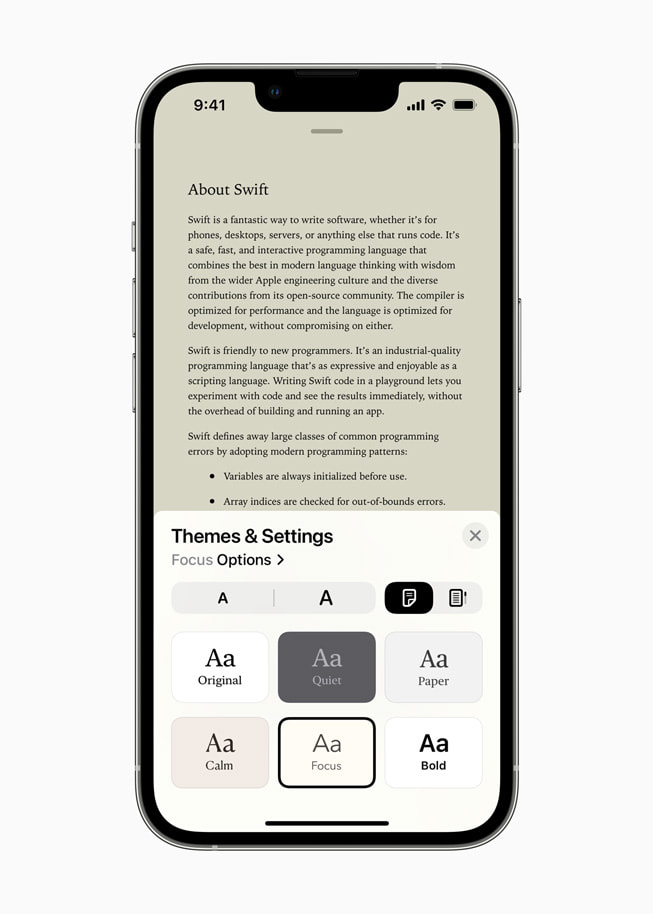

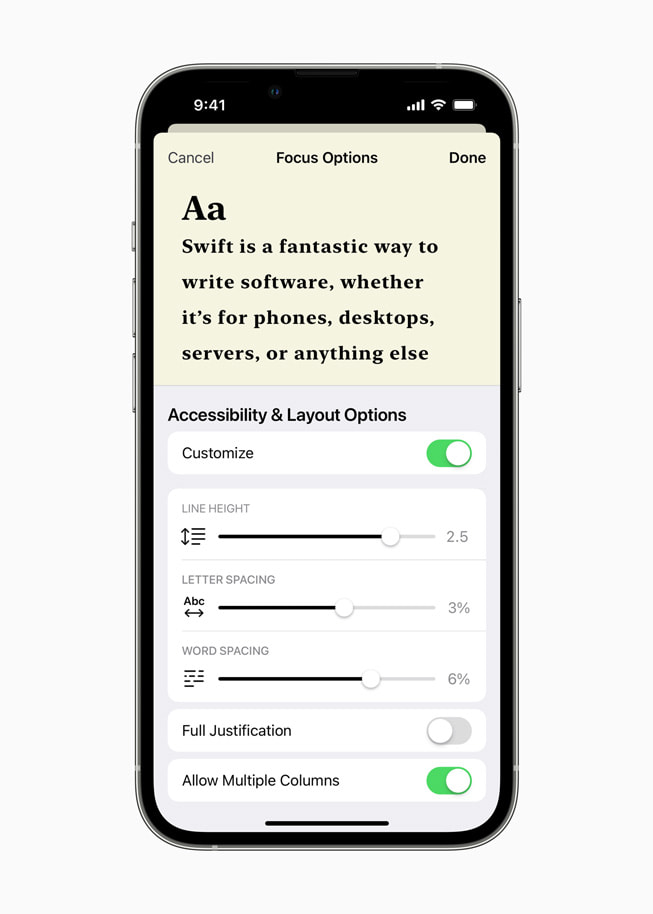

- The Apple Books app will offer new themes, and introduce customisation options such as bolding text and adjusting line, character, and word spacing for an even more accessible reading experience.

Celebrating Global Accessibility Awareness Day

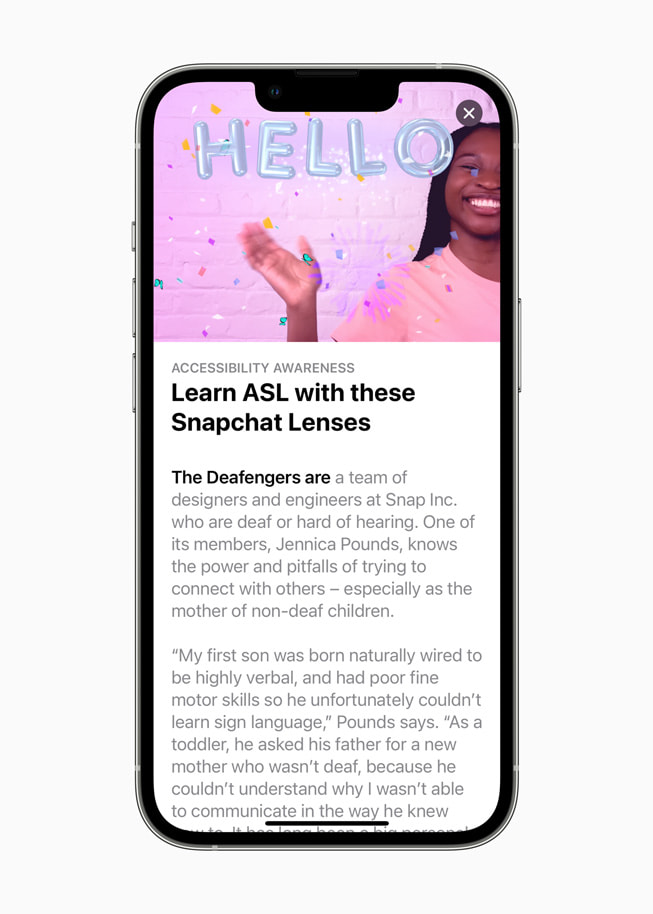

This week, Apple is celebrating Global Accessibility Awareness Day with special sessions, curated collections, and more:

- SignTime will launch in Canada on 19 May to connect Apple Store and Apple Support customers with on-demand American Sign Language (ASL) interpreters. SignTime is already available for customers in the US using ASL, the UK using British Sign Language (BSL), and France using French Sign Language (LSF).

- Apple Store locations around the world are offering live sessions throughout the week to help customers discover accessibility features on iPhone, and Apple Support social channels are showcasing how-to content.

- The Accessibility Assistant shortcut is coming to the Shortcuts app on Mac and Apple Watch this week to help recommend accessibility features based on user preferences.

- This week in Apple Fitness+, trainer Bakari Williams uses ASL to highlight features that are part of an ongoing effort to make fitness more accessible to all. These include Audio Hints, short descriptive verbal cues that support users who are blind or low vision, and Time to Walk and Time to Run episodes, which become “Time to Walk or Push” and “Time to Run or Push” for wheelchair users. Additionally, Fitness+ trainers incorporate ASL into every workout and meditation, all videos include closed captioning in six languages, and trainers demonstrate modifications in each workout so users at different levels can join in.

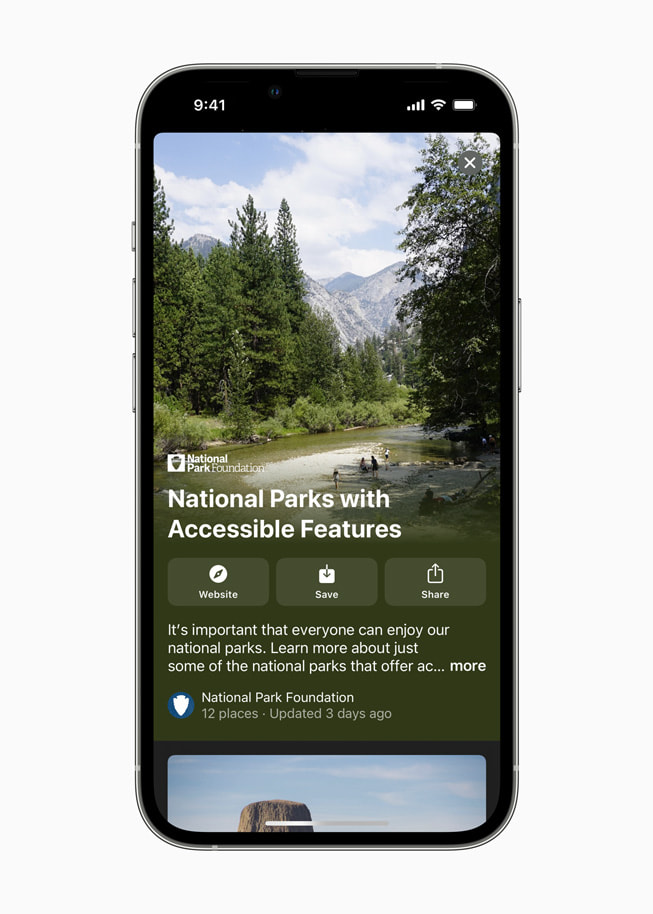

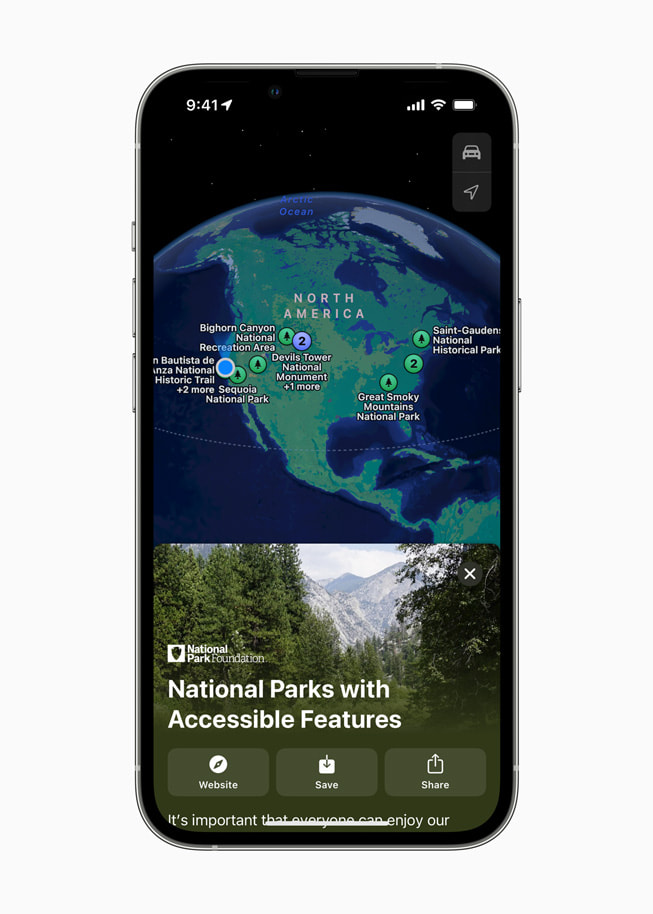

- Apple Maps features a new guide from the National Park Foundation, Park Access for All, to help users discover accessible features, programs, and services to explore in parks across the US. Guides from Gallaudet University (the world’s premier university for Deaf, hard of hearing, and Deafblind students) feature businesses and organisations that value, embrace, and prioritise the Deaf community and signed languages.

- Users can explore accessibility-focused apps and powerful stories from app creators in the App Store; check out the Transforming Our World collection in Apple Books, featuring stories by and about people with disabilities; and learn about creative ways technology is advancing accessibility in Apple Podcasts.

- Apple Music will highlight Saylists, a collection of playlists that each focus on a different sound. Choosing one and singing along is a fun and engaging way to practise vocal sounds or speech therapy.

- The Apple TV app will highlight the latest hit movies and shows featuring authentic representation of people with disabilities. Plus, viewers can explore guest-curated collections from the accessibility community’s standout actors, including Marlee Matlin (“CODA”), Lauren Ridloff (“Eternals”), Selma Blair (“Introducing, Selma Blair”), Ali Stroker (“Christmas Ever After”), and more.


[1] Door Detection and People Detection features in Magnifier require the LiDAR Scanner on iPhone 13 Pro, iPhone 13 Pro Max, iPhone 12 Pro, iPhone 12 Pro Max, iPad Pro 11-inch (2nd and 3rd generation), and iPad Pro 12.9-inch (4th and 5th generation). Door Detection should not be relied upon in circumstances where a user may be harmed or injured, or in high-risk or emergency situations.

[2] Apple Watch Mirroring is available on Apple Watch Series 6 and later.

[3] Live Captions will be available in beta later this year in English (US, Canada) on iPhone 11 and later, iPad models with A12 Bionic and later, and Macs with Apple silicon. Accuracy of Live Captions may vary, and Live Captions should not be relied upon in high-risk situations.

[4] VoiceOver, Speak Selection, and Speak Screen will add support for Arabic (World), Basque, Bengali (India), Bhojpuri (India), Bulgarian, Catalan, Croatian, Farsi, French (Belgium), Galician, Kannada, Malay, Mandarin (Liaoning, Shaanxi, Sichuan), Marathi, Shanghainese (China), Spanish (Chile), Slovenian, Tamil, Telugu, Ukrainian, Valencian, and Vietnamese.

[5] Voice Control Spelling Mode is available in English (US).